Summary:

Robots.txt tells well-behaved bots which parts of your site they may crawl. That shapes everything from Google rankings to how your content is used in AI tools like ChatGPT, Claude, and Perplexity. Allowing the right bots supports discoverability, citation, and organic reach. Blocking the wrong ones can erase your presence from key channels. As AI adoption grows, this simple file has become a high-stakes visibility lever.

Most of us know the robots.txt file as a basic SEO housekeeping tool: a simple text file that tells bots what they can and can't crawl on your site. But in the age of AI, it’s become something more. Your robots.txt file now plays a critical role in shaping how your brand appears (or doesn’t) in generative search, AI assistants, and large language models (LLMs).

It’s no longer just about whether you can be indexed by Google. Your website is also crawled by a growing cast of AI bots from OpenAI, Anthropic, Perplexity, and others, all looking for high-quality content to use for retrieval or model training. Your robots.txt file acts as the gatekeeper. If these bots aren’t allowed to crawl your site, your content is far less likely to be cited, summarized, or included in the datasets powering answers in AI tools. That means your brand may be missing from the conversations your audience is having with AI.

In this guide, we’ll explain what robots.txt really does, why it matters more than ever, and how brands can approach bot visibility strategically. Whether you're a technical SEO or a CMO making strategic decisions about AI, this guide will help you make sense of what to allow, what to block, and why your decision matters.

A quick refresher: what is robots.txt and why does it matter?

The robots.txt file is a plain-text set of instructions that sits at the root of your domain (for example, https://www.example.com/robots.txt). Well-behaved bots check it before they crawl anything else on your site. It doesn’t control what gets indexed; that’s handled with the robots meta tag or the X-Robots-Tag HTTP header. It does determine what compliant bots are allowed to crawl.
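Here's a minimal Python sketch of that check, built on the standard library's `urllib.robotparser`. It assumes a made-up domain (`www.example.com`) and sample rules. `robots_url_for` and `SAMPLE_ROBOTS_TXT` are hypothetical names used only for illustration. The helper shows why the file has to live at the root: a compliant crawler works out the robots.txt location from the host alone, whichever page it actually wants.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser


def robots_url_for(page_url: str) -> str:
    """Return where a crawler looks for robots.txt: always the root of the host."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# Hypothetical robots.txt content, for illustration only.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
"""

parser = RobotFileParser()
parser.parse(SAMPLE_ROBOTS_TXT.splitlines())

page = "https://www.example.com/blog/robots-guide?ref=nav"
print(robots_url_for(page))                 # https://www.example.com/robots.txt
print(parser.can_fetch("Googlebot", page))  # True: the blog isn't disallowed
print(parser.can_fetch("Googlebot", "https://www.example.com/checkout/cart"))  # False
```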

Why does this matter? Because crawling powers discovery. If a bot can’t crawl your content, it can’t learn from it, serve it in a result, or attribute information back to your brand. That means you could be invisible to the very tools your buyers are using to make decisions.

Why this now matters more than ever:

- Bots from LLMs are actively crawling the open web to improve their responses

- Generative AI platforms rely on trusted source material to answer questions

- Sites that are crawlable are more likely to be cited, summarized, and picked up in SERP features like AI Overviews and tools like Perplexity

Your robots.txt file is now a gatekeeper to your participation in AI-powered search.

Opening vs. blocking: a strategic decision

While there are a lot of upsides to allowing all bots to access your site, some brands are choosing to block AI bots. Media companies and content publishers concerned with copyright, content reuse, or monetization models often fall into this camp.

Blocking AI bots may protect content, but it also cuts off opportunity.

Unless you're a major publisher whose revenue depends on paywalled or licensed content, blocking is likely not the best approach for your brand.

By allowing trusted LLMs and search bots to crawl your site, you increase your chances of:

- Being cited in ChatGPT responses or AI-generated summaries

- Being selected as a source for Perplexity or Gemini

- Having your products or services discovered earlier in the journey

It’s a strategic choice—but one with real implications for your future visibility.

Considerations before you open the gates

Before you throw open the doors to every crawler, weigh a few very real considerations. Depending on the size of your organization and the number of stakeholders involved, this could take time. Below are some questions every brand should be asking:

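- Do we have proprietary content we want to protect? Keep client portals, internal tools, and sensitive product data off-limits. Remember that robots.txt is publicly readable and only advisory, so anything truly private also needs authentication.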

- Is our messaging up to date and unified? LLMs can only learn what’s available. If your site is inconsistent or outdated, the wrong story may get amplified.

- Are our most important pages easily discoverable and crawlable? A clear structure with optimized content and internal linking maximizes what AI bots can actually see and understand.
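Here's a minimal sketch of the first point. It assumes hypothetical `/portal/`, `/internal/`, and `/pricing-data/` paths on an example domain. A single catch-all group keeps every compliant crawler out of those areas and leaves marketing pages open. The standard-library parser then confirms the result for a few bots.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical private areas; replace with your own portal, tool, and data paths.
# Advisory only: compliant bots skip these paths, but they still need authentication.
ROBOTS_TXT = """\
User-agent: *
Disallow: /portal/
Disallow: /internal/
Disallow: /pricing-data/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

BASE = "https://www.example.com"
for bot in ("Googlebot", "GPTBot", "ClaudeBot"):
    for path in ("/services/", "/portal/login"):
        verdict = "allowed" if parser.can_fetch(bot, BASE + path) else "blocked"
        print(f"{bot:10} {path:14} {verdict}")
```

Every bot in the loop is allowed on `/services/` and blocked on `/portal/login`, because none of them has its own group and all fall back to the `*` rules.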

The strategic upside of opening your robots.txt to LLMs

When your site is crawlable to the right bots, you:

- Increase brand surface area across LLMs and AI assistants

- Improve entity recognition (so your brand is understood in the context of your industry)

- Support accurate citations and summaries across tools like Perplexity, ChatGPT, and Gemini

- Lay groundwork for zero-click visibility in AI Overviews, answers, and assistant replies

AI tools are changing how users find, trust, and engage with brands. Openness fuels your inclusion in that future.


Example: open vs. closed robots.txt

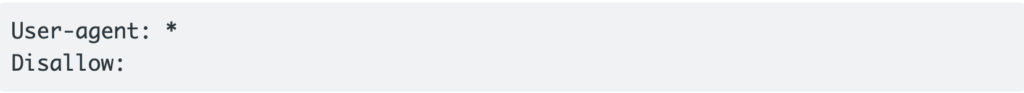

Open robots.txt (default crawl-friendly setup):

This allows all bots to crawl all parts of the site.

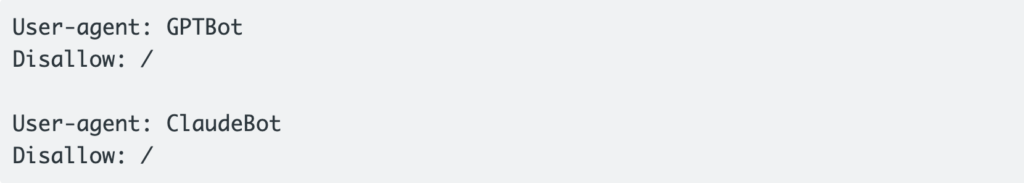

Closed to LLM bots (selective blocking):

This blocks OpenAI and Anthropic from crawling your content while leaving the rest open.
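Written out as text, the two setups above might look like the sketch below. The images' exact contents aren't reproduced here, so treat these as typical versions of each file rather than copies. Python's standard `urllib.robotparser` then confirms which bots each version lets in. The closed version lists several `User-agent` lines above one rule set, which is valid and applies those rules to every listed bot.

```python
from urllib.robotparser import RobotFileParser

# Open: an empty Disallow value means nothing is disallowed, for every bot.
OPEN_ROBOTS_TXT = """\
User-agent: *
Disallow:
"""

# Closed to LLM bots: OpenAI's and Anthropic's crawlers share a group that
# disallows everything; every other bot falls through to the open "*" group.
CLOSED_TO_LLMS_ROBOTS_TXT = """\
User-agent: GPTBot
User-agent: ClaudeBot
Disallow: /

User-agent: *
Disallow:
"""


def load(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a parser we can query."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


open_site = load(OPEN_ROBOTS_TXT)
closed_site = load(CLOSED_TO_LLMS_ROBOTS_TXT)
url = "https://www.example.com/guides/robots-txt"

for bot in ("Googlebot", "bingbot", "GPTBot", "ClaudeBot", "PerplexityBot"):
    # Columns: bot, allowed under the open file, allowed under the closed file
    print(bot, open_site.can_fetch(bot, url), closed_site.can_fetch(bot, url))
```

Running it prints `True True` for Googlebot, bingbot, and PerplexityBot. It prints `True False` for GPTBot and ClaudeBot.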

Important note: Blocking Google, Bing, or other major engines using Disallow: / will prevent them from crawling your entire site. If you're not absolutely sure what you're doing, don’t guess. You could wipe your site off the face of the web if set incorrectly.
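The gap between "block everything" and "block nothing" can be a single slash. This sketch uses the standard-library `urllib.robotparser` and a hypothetical example.com homepage. `Disallow: /` shuts every compliant crawler out of the whole site, while an empty `Disallow:` lets everything through.

```python
from urllib.robotparser import RobotFileParser


def googlebot_can_crawl(robots_txt: str, url: str) -> bool:
    """Parse robots.txt text and report whether Googlebot may fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


HOMEPAGE = "https://www.example.com/"
BLOCK_EVERYTHING = "User-agent: *\nDisallow: /\n"  # "/" matches every path on the site
BLOCK_NOTHING = "User-agent: *\nDisallow:\n"       # no slash: nothing is disallowed

print(googlebot_can_crawl(BLOCK_EVERYTHING, HOMEPAGE))  # False
print(googlebot_can_crawl(BLOCK_NOTHING, HOMEPAGE))     # True
```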

How does this fit into our C.L.A.R.I.T.Y. framework?

Within our C.L.A.R.I.T.Y. framework, robots.txt plays a role early, but its impact carries through:

- Crawl: If bots can’t access your content, nothing else matters. We ensure LLMs and search engines can crawl the right parts of your site.

- Learn: What are these models picking up about your brand? Crawlability is step one, but accuracy comes from great content and consistent structure.

- Integrate: We help clients align their SEO and AI visibility strategies—so your brand shows up clearly across both traditional and generative search.

We audit crawl access, run bot access diagnostics, and help brands think proactively about what they want to expose and what they want to protect.


Which bots to allow (and block)

| Bot Name | User-Agent | What It Crawls | tiptop Recommendation |
| --- | --- | --- | --- |
| Googlebot | Googlebot | Google search index | ✅ Allow |
| Bingbot | bingbot | Bing + Microsoft Copilot | ✅ Allow |
| GPTBot | GPTBot | OpenAI (training data for the models behind ChatGPT) | ✅ Allow |
| ClaudeBot | ClaudeBot | Anthropic / Claude | ✅ Allow |
| Google-Extended | Google-Extended | Google Gemini model training (a robots.txt control token; Google crawls with its existing user agents) | ✅ Allow |
| PerplexityBot | PerplexityBot | Perplexity.ai index + citations | ✅ Allow |
| CCBot | CCBot | Common Crawl (used by many LLMs) | ✅ Allow |
| Amazonbot | Amazonbot | Amazon Alexa + other product crawlers | ✅ Allow (case-by-case) |
| Applebot | Applebot | Siri + Apple services | ✅ Allow |
| Meta Agent | Meta-ExternalAgent | Meta AI training and content indexing | ✅ Allow |
| X / Twitterbot | Twitterbot | Link previews for X (formerly Twitter) | ✅ Allow |
| YouBot | YouBot | You.com assistant + generative search | ✅ Allow |
| Bytespider | Bytespider | TikTok / ByteDance data collection | ⚠️ Caution / Evaluate |
| AhrefsBot | AhrefsBot | SEO tool crawler | ⚠️ Optional |
| SemrushBot | SemrushBot | SEO tool crawler | ⚠️ Optional |
| AllenAI Bot | ai-crawler | AI research via Allen Institute | ✅ Allow |
| DuckDuckGo Bot | DuckDuckBot | Privacy-based search engine | ✅ Allow |
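If you'd rather manage these decisions as data than hand-edit the file, a small script can render them into robots.txt groups. This is a minimal sketch under stated assumptions. `POLICY` is a hypothetical excerpt of the table above. Bytespider is shown as blocked purely as an example of resolving a "Caution / Evaluate" row, not as a recommendation. `render_robots_txt` is an illustrative helper, not a standard tool.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser

# Hypothetical policy: True = allow the whole site, False = block the whole site.
# Tokens come from the User-Agent column above; adjust to your own decisions.
POLICY = {
    "Googlebot": True,
    "bingbot": True,
    "GPTBot": True,
    "ClaudeBot": True,
    "Google-Extended": True,
    "PerplexityBot": True,
    "CCBot": True,
    "Bytespider": False,  # example decision for a "Caution / Evaluate" bot
}


def render_robots_txt(policy: dict[str, bool], sitemap_url: str = "") -> str:
    """Render one group per bot, then a catch-all group for everyone else."""
    lines: list[str] = []
    for token, allowed in policy.items():
        lines.append(f"User-agent: {token}")
        # An empty Disallow value allows everything; "/" blocks everything.
        lines.append("Disallow:" if allowed else "Disallow: /")
        lines.append("")  # a blank line separates groups
    lines.append("User-agent: *")
    lines.append("Disallow:")
    if sitemap_url:
        lines.append("")
        lines.append(f"Sitemap: {sitemap_url}")
    return "\n".join(lines) + "\n"


robots_txt = render_robots_txt(POLICY, "https://www.example.com/sitemap.xml")
print(robots_txt)

# Sanity-check the rendered file with the standard-library parser.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
print(parser.can_fetch("GPTBot", "https://www.example.com/"))      # True
print(parser.can_fetch("Bytespider", "https://www.example.com/"))  # False
```

Keeping the policy in one place makes the quarterly review a matter of editing a dictionary and regenerating the file. It also keeps the file's syntax consistent from one revision to the next.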

What about llms.txt? (Yes, it's a thing, kind of)

You may have seen chatter about llms.txt, a proposed Markdown file at your site's root. It gives large language models a curated, easy-to-parse guide to your most important content. It sounds promising, but as of now, it's not an industry standard, and it isn't a mechanism for granting or denying crawl permissions. Major AI crawlers like OpenAI’s GPTBot and Anthropic’s ClaudeBot still follow robots.txt, not llms.txt.

That could change in the future. But for now, your best bet is to keep your robots.txt file tightly dialed in. That’s what the big players are listening to—and it’s where visibility decisions are happening today.

Implementation and monitoring

Here’s how to implement a smart robots.txt strategy:

- Audit your current file: Use Google Search Console or tools like Screaming Frog to verify what’s blocked.

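- Test before launch: Validate the syntax with a robots.txt parser or testing tool before you publish. Afterward, check the robots.txt report in Google Search Console for fetch and parsing errors; that report replaced Google’s retired robots.txt Tester.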

- Use the right syntax: A single typo can break your file. Stick to clear User-agent and Disallow/Allow rules.

- Segment by bot: Don’t apply blanket rules. You can allow some LLMs while blocking others.

- Revisit quarterly: New bots are emerging fast. Reevaluate every few months to ensure you’re aligned with your visibility strategy.

- Watch your crawl logs: Use server log data to monitor which bots are hitting your site and what they’re accessing.

- Check your impact: Tools like Perplexity’s “Sources” tab or ChatGPT’s web-browsing citations can help confirm if your brand is showing up.
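For the "Watch your crawl logs" step, a few lines of Python can tally which search and AI bots are hitting your site and which pages they request. This sketch assumes access logs in the common Apache/Nginx "combined" format, where the user agent is the last quoted field. `BOT_TOKENS`, `count_bot_hits`, and the sample lines are hypothetical. User-agent strings can be spoofed, so verify important findings against the operators' published IP ranges or reverse-DNS guidance where available.

```python
import re
from collections import Counter

# Hypothetical watch list: extend with the tokens from your own allow/block decisions.
BOT_TOKENS = ["Googlebot", "bingbot", "GPTBot", "ClaudeBot", "PerplexityBot", "CCBot"]

# Combined log format: ... "METHOD /path PROTOCOL" status bytes "referer" "user-agent"
LOG_LINE = re.compile(r'"[A-Z]+ (?P<path>\S+) [^"]*" \d{3} .*"(?P<agent>[^"]*)"$')


def count_bot_hits(log_lines):
    """Count requests per bot token and the paths each bot requested."""
    hits = Counter()
    paths = {}
    for line in log_lines:
        match = LOG_LINE.search(line.rstrip("\n"))
        if not match:
            continue  # skip lines that aren't combined-format requests
        agent = match.group("agent").lower()
        for token in BOT_TOKENS:
            if token.lower() in agent:  # case-insensitive substring match
                hits[token] += 1
                paths.setdefault(token, Counter())[match.group("path")] += 1
                break
    return hits, paths


# Hypothetical, simplified log lines (documentation IP ranges, shortened user agents).
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Jun/2025:12:00:00 +0000] "GET /blog/robots-guide HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '198.51.100.7 - - [10/Jun/2025:12:00:03 +0000] "GET /services/ HTTP/1.1" 200 8042 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    '198.51.100.7 - - [10/Jun/2025:12:00:09 +0000] "GET /blog/robots-guide HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    '192.0.2.10 - - [10/Jun/2025:12:01:00 +0000] "GET / HTTP/1.1" 200 1500 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

hits, paths = count_bot_hits(SAMPLE_LOG)
print(hits.most_common())  # [('Googlebot', 2), ('GPTBot', 1)]
print(paths["GPTBot"])     # Counter({'/blog/robots-guide': 1})
```

Against a real server, you could pass an open file instead of the sample list, for example `with open("access.log") as f: hits, paths = count_bot_hits(f)`. The file name there is hypothetical.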


A note of caution: don’t guess with robots.txt

It’s deceptively simple—but extremely powerful. One wrong line can block your site from Google entirely. If you're not confident editing robots.txt:

- Involve your SEO partner or technical team

- Use verified tools for testing

- Always keep backups of prior versions
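Keeping the previous version also lets you compare how the old and new files behave, not just how their text differs. This minimal sketch assumes you have the current and proposed files as strings. `KEY_BOTS`, `CRITICAL_URLS`, and `find_regressions` are hypothetical names. The check flags any URL a key bot could crawl before the change but would be blocked from afterward.

Python's parser is only an approximation of Google's. It applies the first matching rule in file order, while Google uses the most specific (longest) match. It also doesn't understand `*` or `$` wildcards inside paths. Confirm edge cases in Search Console as well.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser

# Hypothetical bots and pages you never want to lose crawl access to.
KEY_BOTS = ["Googlebot", "bingbot", "GPTBot", "ClaudeBot", "PerplexityBot"]
CRITICAL_URLS = [
    "https://www.example.com/",
    "https://www.example.com/services/",
    "https://www.example.com/blog/robots-guide",
]


def _parser(robots_txt: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def find_regressions(old_txt: str, new_txt: str) -> list[tuple[str, str]]:
    """Return (bot, url) pairs that were crawlable under old_txt but not new_txt."""
    old, new = _parser(old_txt), _parser(new_txt)
    return [
        (bot, url)
        for bot in KEY_BOTS
        for url in CRITICAL_URLS
        if old.can_fetch(bot, url) and not new.can_fetch(bot, url)
    ]


CURRENT = "User-agent: *\nDisallow: /checkout/\n"
# A proposed edit with a one-line mistake: "/" instead of "/checkout/".
PROPOSED = "User-agent: *\nDisallow: /\n"

problems = find_regressions(CURRENT, PROPOSED)
for bot, url in problems:
    print(f"REGRESSION: {bot} can no longer crawl {url}")
print(len(problems))  # 15 (5 bots x 3 URLs)
```

Running a check like this before every deploy turns "don't guess" into a repeatable step. It catches the one-line mistake before it reaches production.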

Your robots.txt file is a strategic visibility layer

Robots.txt has always mattered. But now, it’s part of a larger strategic play for visibility across AI tools, search engines, and assistants.

Done right, it helps ensure your brand is not only crawlable, but cited, learned from, and trusted by the systems shaping the next generation of search.

Let’s make sure you’re open to the right bots—and closed to the rest.

Need help with implementation or guidance on which bots align with your goals? Let’s talk.
